2D Guidance in Minimally Invasive Procedures

Research Strategy

(a) SIGNIFICANCE: The use of two-dimensional (2D) ultrasound (US) guidance in minimally invasive procedures such as percutaneous biopsies,1,2 pain management,3,4 abscess drainage,5 and radiofrequency ablation6 has gained popularity. These procedures all involve insertion of a needle toward a desired anatomical target. Image guidance facilitates localization of the needle throughout the procedure, increasing accuracy, reliability and safety.7 US offers several advantages over other imaging modalities traditionally used in interventional radiology, such as fluoroscopy, magnetic resonance imaging (MRI) and computed tomography (CT): it provides real-time visualization of the patient’s anatomy (including soft tissue and blood vessels) vis-à-vis the needle, without exposure to ionizing radiation.8 US is also portable and low cost compared with these modalities.

Despite these advantages, the effectiveness of 2D US in needle guidance is highly operator dependent. In the in-plane approach, where the needle shaft is parallel to the imaging plane, the needle shaft and tip should ideally be continually visible.9 However, aligning the needle shaft with the scan plane is difficult. Even when the needle is properly aligned, the steep orientation of the needle with respect to the US beam (required in most procedures) causes nonaxial specular reflection of the US signal off the needle surface because of the large angle of incidence.10 Under these imaging conditions, the needle shaft appears discontinuous and/or the tip is invisible. This scenario is common with deep targets, for example during liver biopsies and epidural blocks. The challenge of needle visibility at increasing depths is compounded by attenuation of the US signal. Further, high-intensity soft tissue artifacts, acoustic shadowing from dense structures such as bone, and speckle noise obstruct needle visibility. To recover needle visibility, clinicians manipulate the transducer by translation or rotation, move the needle to and fro (pump maneuver),11 move the stylet, rotate the needle, or use hydrolocation.12 These techniques are variable and subjective. Poor needle visibility can have detrimental effects on procedures, including reduced procedure efficacy, increased procedure duration, neural, visceral or vascular injury, and infection.
Diagnostic accuracy of 90-95% has been reported for US guided breast biopsies,13-15 and 83-95% for US guided liver biopsies.16 It is known that targeting errors due to insufficient needle tip visualization contribute to false negative results.17 In pain management, accidental intraneural injections have been reported in 17% of ultrasound-guided upper- and lower-extremity blocks, even when the procedures were conducted by expert anesthesiologists.18,19 Most of these arise because of poor needle tip localization, which makes it difficult to distinguish between subfascial, subepineural, or intrafascicular injections.20

In our ongoing work, we have developed an algorithm for needle enhancement and tip localization in 2D US. We achieved this by modeling transmission of the US signal.21 We incorporated US signal modeling into an optimization problem to estimate an unknown signal transmission map, which was then used to enhance the needle shaft and tip while respecting US-specific signal propagation constraints.22 Automatic tip localization was achieved using spatially distributed image statistics limited to the trajectory region. However, incorrect tip localization occurred when high-intensity soft tissue interfaces were present along the needle trajectory. The algorithm also required a visible portion of the shaft close to the transducer surface, necessitating proper alignment of the needle with the scan plane.

We have also conducted preliminary work on needle detection and enhancement in three-dimensional (3D) US, a modality with the potential to obviate the limitations of 2D US in needle guidance. Instead of the latter’s planar view (one slice at a time), 3D US displays volume data, allowing better visualization of anatomy and needle trajectory at all needle axis orientations. This alleviates the challenge of needle alignment in the scan plane.23 Nevertheless, needle obliquity at steep insertion angles, depth-dependent attenuation, acoustic shadowing, imaging artifacts and speckle remain.24,25 Needle visibility is also affected by the small size of the needle relative to the US volume. In fact, reported false-negative results for breast biopsies under 3D US show no improvement over those with 2D US.26,27 Consequently, 3D US has not replaced 2D US as the standard of care. To overcome these limitations, researchers have proposed computational methods for needle enhancement and localization in 3D US. These include principal component analysis based on eigen-decomposition,28 the 3D Hough transform,29 the 3D Radon transform,30 parallel integration projection,31 and iterative model-fitting methods such as random sample consensus (RANSAC).32 The accuracy of these methods is affected by attenuation and high-intensity artifacts. In addition, computational complexity arises from processing the large amount of volume data.33 Projection-based methods fail when a good portion of the shaft is not visible and the tip intensity is low. A more robust needle localization framework based on oscillation of a stylus was recently proposed, although it fails in a single-operator scenario, especially for shallow angles.34 All the mentioned methods are based on modeling B-mode image data.

The current need in interventional radiology is a cost-effective, easy-to-use, radiation-free, real-time imaging platform that provides continuous, highly accurate guidance during needle insertion without interrupting the clinical workflow. Our long-term goal of developing a computational 3D US-based imaging platform for enhancement and localization of needles is informed by this need. To address it, we hypothesize that automatic, real-time, accurate, and continuous target identification using 3D radiofrequency (RF) US data is feasible and could be used to guide needle insertion in interventional radiology. Our preliminary work on modeling US signal transmission in 2D US, as well as needle detection and enhancement in 3D US, strongly supports the premise that modeling the RF US signal, coupled with advanced reconstruction methods, will improve needle visualization and localization in 3D US. The envisaged 3D US reconstruction techniques will incorporate emerging work from machine learning and advanced beamforming to achieve needle enhancement and localization. We envision new pathways for processing and presenting US data, which should make this rich modality accessible to all end users for needle guidance in interventional radiology. The impact of the proposal will be multiplied because the developed algorithms, built on an open-source software platform, can also be incorporated as a stand-alone component into existing US imaging platforms.

(b) INNOVATION: Previous work on needle enhancement has mostly focused on enhancement of B-mode images. B-mode images are derived from RF data (the raw signal backscattered onto the US transducer) after several proprietary processing steps. The raw signal is known to contain more statistical information,35 which is lost along the processing pipeline. Parallel integral projection to improve needle visibility in soft tissues using 2D and 3D RF data has previously been investigated, although no image visualization, needle enhancement or localization was demonstrated.36 It has been shown that the post-beamformed 2D RF signal allows improved enhancement of local features in US images.37,38 Image enhancement methods applied to the RF signal have also been shown to improve the display of the orientation of a biopsy needle.37,38 This study is innovative in three respects: 1) To the best of our knowledge, it is the first to investigate needle enhancement and localization from 3D pre-beamformed RF data (previous approaches used post-beamformed RF information). 2) The use of machine learning approaches, such as deep learning, for needle enhancement in 3D US will be a first. 3) Although this pilot will focus on validating the developed framework on pain management and liver biopsy procedures as a case study, the new mathematical and computational approaches proposed in this work will lead to developments that can easily be adopted for enhancement and localization of needles in other interventional radiology procedures. We expect that the achieved results will lead to gradual adoption of 3D US as the standard of care in problematic minimally invasive procedures where 2D US is challenged, thus improving therapeutic and diagnostic value, reducing morbidity and optimizing patient safety.

(c) APPROACH: We propose to test the hypothesis that needle detection, enhancement and localization based on the raw 3D RF signal will provide a more accurate and robust platform for needle guidance than the current state of the art. The basis for this hypothesis is the precedent of using the RF signal for bone localization,39 together with our published21,22 and unpublished work on needle enhancement and localization based on 2D/3D B-mode image data. These preliminary data are presented below.

Preliminary work 1 – Modeling 2D US signal transmission for Needle Shaft and Tip Enhancement

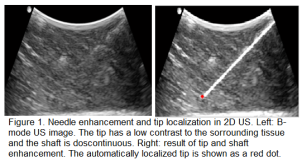

When the US signal pulses are sent by the transducer into tissue, they undergo reflection, scattering, absorption and refraction. These phenomena all contribute to attenuation; the loss in intensity of the US pulses as they travel deeper into tissue. Attenuation is responsible for non-conspicuity of the needle tip and shaft at increasing depths. Previously, we have shown that modeling signal transmission in 2D US based on 2D image data, while considering depth-dependent attenuation leads to enhancement of the needle and more accurate tip localization.21 The modelling framework yields signal transmission maps, which are then used in an image-based contextual regularization process to achieve tip and shaft enhancement (Fig.1). A tip localization accuracy of  mm was achieved in ex vivo tissue. However, the localization accuracy is lower when soft tissue interfaces are present along the needle trajectory, and when the needle is not properly aligned in the scan plane. In the context of this proposal, our objective is to apply similar US signal modeling and contextual regularization, this time based on RF data.
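
To make the depth dependence of attenuation concrete, the sketch below uses the common first-order soft-tissue approximation in which round-trip loss in decibels grows linearly with depth and frequency. The coefficient of 0.5 dB/cm/MHz, the function names, and the example values are assumptions for illustration only; this is not the transmission-map estimation of reference 21.

```python
def round_trip_attenuation_db(depth_cm, freq_mhz, alpha_db_cm_mhz=0.5):
    """Approximate two-way attenuation (dB) of an echo returning from depth_cm.

    alpha_db_cm_mhz is an assumed soft-tissue coefficient, not a measured value.
    """
    return 2.0 * alpha_db_cm_mhz * freq_mhz * depth_cm


def compensate(amplitude, depth_cm, freq_mhz, alpha_db_cm_mhz=0.5):
    """Scale an echo amplitude by the gain that undoes the modeled loss."""
    loss_db = round_trip_attenuation_db(depth_cm, freq_mhz, alpha_db_cm_mhz)
    return amplitude * 10.0 ** (loss_db / 20.0)


# Hypothetical example: a 5 MHz pulse reflected by a needle at 4 cm depth.
loss = round_trip_attenuation_db(depth_cm=4.0, freq_mhz=5.0)
restored = compensate(amplitude=0.1, depth_cm=4.0, freq_mhz=5.0)
```

Under these assumed values the echo loses 20 dB on the round trip, which illustrates why a tip at depth can fall below the intensity of shallower soft tissue interfaces.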

Preliminary work 2 – Machine learning approaches for needle detection and enhancement in 3D US

Since 3D US is multiplanar, the challenge associated with needle alignment in the scan plane is partially eliminated when it is used in needle guidance. Nevertheless, 3D US is also affected by US signal attenuation. Previous methods proposed for needle enhancement and localization in 3D US did not address this need. In addition, most were computationally demanding because of the requirement to process the entire US volume. In this work (results submitted to 20th MICCAI conference, 2017), we have developed a learning-based method for automatic needle detection in 3D US volumes. The pixel-wise classifier generates a sub-volume containing only slices with needle information. In so doing, computational complexity on the subsequent enhancement and localization algorithms is reduced (Fig.2). The tip is automatically localized in 3D. We achieved 88% detection precision, 98% recall rate, a slice classification time of 0.06 seconds, a localization accuracy of  mm, and a training time of 15 seconds.
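
For reference, detection precision and recall of the kind reported above can be computed from per-slice decisions as in the following minimal sketch. The per-slice boolean representation and the function name are assumptions; the labels shown are made up and are not study data.

```python
def precision_recall(predicted, truth):
    """Return (precision, recall) for per-slice needle/no-needle decisions.

    predicted, truth: equal-length sequences of booleans, one entry per slice.
    """
    pairs = list(zip(predicted, truth))
    tp = sum(1 for p, t in pairs if p and t)        # needle slices found
    fp = sum(1 for p, t in pairs if p and not t)    # false alarms
    fn = sum(1 for p, t in pairs if not p and t)    # needle slices missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical five-slice volume.
truth = [False, True, True, True, False]
predicted = [True, True, True, True, False]
precision, recall = precision_recall(predicted, truth)
```

A detector tuned for high recall, as in this toy case, keeps every needle slice in the sub-volume at the cost of passing a few extra slices to the later enhancement step.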

Figure 2. Learning-based needle detection, enhancement and localization in 3D US. Top row: an example of needle detection. Here, the original volume contained 41 slices, and the classifier identified only 7 containing needle data. Bottom row: the enhancement process on the sub-volume. Left, enhanced intensity projection image. Middle, automatically localized tip (red) displayed on the relevant axial slice. The blue cross is the manually localized tip. Right, trajectory estimation indicated by the green line.

Specific Aim 1. To develop RF-signal modeling algorithms for improved 3D US image reconstruction

For this aim, we hypothesize that adaptive beamforming methods applied to pre-beamformed 3D RF data will enhance needle visibility and improve the quality of US volumes. During the formation of a US image, the reflected US signals are received by the transducer elements at different time points because of varying signal travel distances. Beamforming on each scan line establishes signal synchronism before aggregation. The conventional method of beamforming in both 2D and 3D US is delay and sum (DAS). Here, received signals are electronically delayed and then combined by a beamformer whose weights are fixed and independent of the echo signals, leading to an undesirably wide main lobe and high side-lobe levels that produce imaging artifacts and decrease image resolution and contrast.40 In this architecture, the angular resolution depends on the length of the scan aperture and the fixed operating frequency.41 In a fixed hardware configuration, these parameters cannot be increased, hence resolution cannot be improved. To overcome this challenge, adaptive beamforming methods based on minimum variance42-45 and multi-beam covariance matrices46 have been proposed. With adaptive beamformers, signal detection can be maximized while the beam width and side-lobe artifacts are minimized.47,48 Recently, phase factor beamforming, where phase variations are tracked across the receive aperture domain, has been shown to improve the appearance of bone surfaces in 2D US data.49 Bone features, like needle features, are hyperechoic when imaged with US. Therefore, in this aim we will develop an adaptive phase-factor beamforming method to enhance hyperechoic targets such as the needle from 3D pre-beamformed RF data.
Specifically, we will investigate an adaptive beamformer that combines ideas from minimum variance (MV) adaptive beamforming,50 signal regularization based on statistical information in RF data,51 and Toeplitz-structured covariance matrices52 to minimize computational complexity. We expect this reconstruction technique to adapt the data to the clinical application of needle enhancement by improving image resolution, contrast, and speckle suppression. The algorithms will be incorporated into an open-source imaging platform for real-time data collection and processing.
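
As a point of reference for the conventional pipeline described above, the following is a minimal delay-and-sum sketch for a single focal point. It assumes a linear array, a plane-wave transmit (so the transmit path equals the focal depth), a speed of sound of 1540 m/s, and a 40 MHz sampling rate; all names and parameter values are hypothetical, and the apodization weights are simply supplied by the caller (uniform by default). It is not the adaptive phase-factor beamformer proposed in this aim.

```python
import math


def delay_index(element_x, focus, c=1540.0, fs=40e6):
    """Sample index at which an echo from `focus` arrives at one element.

    element_x: lateral element position (m); focus: (x, z) in metres.
    Transmit path is assumed to be the focal depth z (plane-wave transmit).
    """
    fx, fz = focus
    path = fz + math.hypot(fx - element_x, fz)  # transmit + receive distance
    return int(round(path / c * fs))


def das_sample(rf, element_xs, focus, c=1540.0, fs=40e6, weights=None):
    """Delay-and-sum output at one focal point.

    rf: list of per-element RF traces, rf[i][n] = sample n of element i.
    weights: apodization weights; uniform (1/N) when omitted.
    """
    if weights is None:
        weights = [1.0 / len(rf)] * len(rf)
    total = 0.0
    for trace, x, w in zip(rf, element_xs, weights):
        n = delay_index(x, focus, c, fs)
        if 0 <= n < len(trace):  # ignore delays outside the recorded window
            total += w * trace[n]
    return total


# Hypothetical three-element array with a point reflector at 10 mm depth.
xs = [-1e-3, 0.0, 1e-3]
target = (0.0, 10e-3)
rf = [[0.0] * 1000 for _ in xs]
for trace, x in zip(rf, xs):
    trace[delay_index(x, target)] = 1.0  # ideal unit echo on each channel

on_target = das_sample(rf, xs, target)
off_target = das_sample(rf, xs, (0.0, 5e-3))
```

An adaptive beamformer such as minimum variance would compute the weights from the received channel data for each focal point instead of taking them as a fixed input.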

Overall, we expect that the algorithms developed in Aim 1 will allow enhanced representation of US needle data with increased diagnostic value. The images obtained from this aim will be used as input to the algorithms proposed in Aim 2.

Specific Aim 2. To develop methods for needle enhancement and tip localization in 3D US images

Our working hypothesis for this aim is that learning-based approaches for needle detection, coupled with image reconstruction methods in 3D US, will achieve improved needle enhancement and tip localization. In our previous work, we have shown that a linear learning-based pixel classifier for needle data in 3D US, based on local-phase image projections, improves needle enhancement and reduces computational load. The detector uses Histogram of Oriented Gradients (HOG)53 descriptors extracted from local phase projections and a linear support vector machine (SVM) baseline classifier. Recently, deep learning approaches based on convolutional neural networks (CNNs) have been shown to produce very accurate results for segmentation of medical image data.54 However, enhancement or segmentation of needles from US data using CNNs has not yet been investigated. Therefore, in this aim we will develop a needle enhancement and segmentation method using CNNs. Needle images with various insertion angles and depths will be labeled by an expert radiologist. Our clinical collaborator Dr. Nosher and several radiologists from the Robert Wood Johnson Medical Hospital (RWJMH) will be involved in this labeling process. We will use two different data sets during labeling. The first data set will consist of retrospective US images downloaded from the RWJMH database. Specific focus will be given to liver biopsy and epidural administration procedures in which US has been used to guide the needle insertion. The second data set will consist of needle US scans collected using ex vivo tissue samples as the imaging medium. These scans will be collected at the PI’s laboratory using an open-source platform US machine with 3D imaging capabilities. The collected ex vivo data will be enhanced using the beamforming methods developed in Aim 1.
The labeling process will involve manual identification of the needle tip and shaft in the two data sets. A fully convolutional neural network54 will be trained using the labeled data. This architecture does not require extensive training data sets and yields high segmentation accuracy; it was previously used for segmenting cell structures.54 The output of this operation, a fuzzy 3D probability map (high-probability regions corresponding to the needle interface), will be used as input to our previously developed needle tip localization method. The automatically identified needle tips will be compared against the manually identified needle tips. More details about the specific clinical data collection and validation are provided in Specific Aim 3 and Protection of Human Subjects.
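
To illustrate how a 3D probability map can be reduced to a single tip candidate, the sketch below thresholds the map and returns the deepest voxel that survives. This is a deliberately simple stand-in and not our previously developed tip localization method; the `prob[z][y][x]` layout with `z` indexing depth, the 0.5 threshold, and the function name are all assumptions.

```python
def deepest_needle_voxel(prob, threshold=0.5):
    """Return (x, y, z) of the deepest voxel with probability >= threshold.

    prob[z][y][x] holds needle probabilities in [0, 1]; z is assumed to
    index depth. Returns None when no voxel reaches the threshold.
    """
    best = None
    for z, plane in enumerate(prob):
        for y, row in enumerate(plane):
            for x, p in enumerate(row):
                if p >= threshold and (best is None or z > best[2]):
                    best = (x, y, z)
    return best


# Hypothetical 3 x 2 x 2 map (depth, rows, columns).
prob = [
    [[0.1, 0.9], [0.0, 0.0]],
    [[0.0, 0.0], [0.8, 0.2]],
    [[0.3, 0.0], [0.0, 0.4]],
]
tip = deepest_needle_voxel(prob)
```

A practical localizer would also need to constrain the search to the estimated needle trajectory so that isolated high-probability voxels from tissue interfaces are not mistaken for the tip.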

Overall, at the end of Aim 2 we expect to have a system providing continuous real-time monitoring of needle insertion using 3D US for improved guidance in interventional radiology procedures.

Specific Aim 3. To validate the developed imaging platform on clinical data

To validate the algorithms developed in Aims 1-2, we will test them extensively on ex vivo and clinical data. No clinical trial will be conducted under this proposal. Our initial validation will be limited to epidural administration and liver biopsy procedures.

Ex vivo data: This study will be conducted to validate Aims 1-2. US scans will be collected with two different needles: 1) a general 17-gauge Tuohy epidural needle (Arrow International, Reading, PA, USA), and 2) an 18-gauge BioPince full core liver biopsy needle (Argon Medical Devices, Athens, Texas, USA). The needles will be inserted at varying insertion angles (30° to 70°) and depths (up to 12 cm). Ex vivo porcine, bovine, liver, kidney and chicken tissue samples will be used as the imaging medium. 3D pre-beamformed RF data will be collected using a SonixTouch US system (Analogic Corporation, Peabody, MA, USA) equipped with a 3D phased array transducer. The US machine provides an open-source research interface that allows custom applications to be run directly on the machine with its 3D transducers. The image resolution will vary from 0.1 mm to 0.3 mm depending on the depth setting. We will collect 300 different 3D US scans for each tissue sample (1500 3D US scans in total). The collected scans will be enhanced using the algorithms developed in Aim 1. From the enhanced data, our clinical collaborators will manually identify the needle tips. Three radiologists with varying expertise will be involved in the validation process so that the inter-user variability error can be calculated. We will also ask the same users to repeat the needle tip identification after two weeks to assess the intra-user variability error. The labeled data will be used to train the CNN proposed in Aim 2. For testing the CNN algorithm, we will collect 500 additional US scans. The manually identified needle tip locations from this new data set will be compared to the automatically extracted needle tip locations obtained from the algorithms developed in Aims 1-2. The Euclidean distance error between the two tip locations (manual vs. automated) will be calculated for quantitative validation.
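
The Euclidean distance error described above can be computed as in the following minimal sketch. It assumes tip locations are given as (x, y, z) coordinates in millimetres and reports the mean and sample standard deviation; the function names and the coordinates shown are hypothetical, not study data.

```python
import math
from statistics import mean, stdev


def tip_errors_mm(manual, automated):
    """Per-scan Euclidean distance between manual and automated tip locations.

    manual, automated: equal-length sequences of (x, y, z) tuples in mm.
    """
    return [math.dist(m, a) for m, a in zip(manual, automated)]


def summarize(errors):
    """Return (mean, sample standard deviation) of the errors in mm."""
    return mean(errors), stdev(errors)


# Hypothetical tip coordinates for two scans.
manual = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
automated = [(3.0, 4.0, 0.0), (1.0, 1.0, 1.0)]
errors = tip_errors_mm(manual, automated)
mean_err, sd_err = summarize(errors)
```

The same function can be applied to pairs of readers, or to one reader's two sessions, to obtain the inter-user and intra-user variability errors.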

Clinical data: This study will involve collection of retrospective US data from patients who undergo a liver biopsy or epidural administration as part of their standard of care. Women and minorities will be appropriately represented among the included patients. Neither sex nor race will serve as an inclusion or exclusion criterion. Specific focus will be given to patients who are 21 years and older and require a liver biopsy or epidural administration. All US data and patient information (age, sex, height, weight, and laboratory data) will be assigned a non-identifying alphanumeric code so that participants cannot be re-identified from the acquired data. Additional information is included in ‘Protection of Human Subjects’. In total, we will collect 1600 different US scans from 400 patients. We will use 1200 scans for labeling (manual tip and needle shaft localization) to train the CNN method developed in Aim 2. For testing, 400 US scans that are not part of the training data set will be used. Again, expert radiologists will be involved in the labeling and testing procedures for tip and shaft identification. Error calculations will use the Euclidean distance between the two tip locations (manual vs. automated).