Heat Orchestration

Chapter 5 Heat Orchestration

5.1 Brief Overview of Heat

Heat is the main project of the OpenStack Orchestration program. It implements an orchestration engine that launches multiple composite cloud applications from templates, which are text files that can be treated like code. The native Heat template format is still evolving, but Heat also aims to be compatible with the AWS CloudFormation template format, so that many existing CloudFormation templates can be launched on OpenStack. Heat provides both an OpenStack-native REST API and a CloudFormation-compatible Query API. Orchestration is primarily aimed at deploying software applications and managing their configuration through templates, instead of manipulating virtual infrastructure by hand or with scripts.

Heat works with a declarative model: the template describes the desired result, and Heat works out the sequence of actions needed to turn that model into reality. The collection of infrastructure resources that Heat creates from a template is called a stack. Orchestration therefore allows you to treat your infrastructure like code: you can store your templates in a version control system such as Git to track changes, and when you update the stack with a new template, Heat performs the remaining actions. The main interface to Heat is its OpenStack-native REST API. Heat sits between the user and the APIs of the core OpenStack services, in much the same way as the dashboard (Horizon) does, and Heat can also be accessed through Horizon. A Heat template describes the infrastructure of a cloud application in a human-readable code format that can be edited and versioned. The infrastructure resources Heat manages include servers, floating IPs, volumes, security groups, and users.
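As a minimal sketch of the declarative model, the Heat (HOT) template below only states which resources should exist; it says nothing about the order of API calls, which Heat works out itself. The resource names (`demo_secgroup`, `demo_volume`), the rules, and the volume size are illustrative assumptions, not part of the deployment described in this chapter.

```yaml
heat_template_version: 2013-05-23

description: >
  Minimal declarative example (hypothetical names and values):
  a security group and a volume managed as one stack.

resources:
  # Security group allowing inbound SSH and ICMP (ping) from anywhere
  demo_secgroup:
    type: OS::Neutron::SecurityGroup
    properties:
      name: demo-secgroup
      rules:
        - protocol: tcp
          port_range_min: 22
          port_range_max: 22
          remote_ip_prefix: 0.0.0.0/0
        - protocol: icmp
          remote_ip_prefix: 0.0.0.0/0

  # 1 GB Cinder volume, tracked by Heat as part of the same stack
  demo_volume:
    type: OS::Cinder::Volume
    properties:
      size: 1
```

Keeping a file like this in Git and updating the stack from a new revision is the "infrastructure as code" workflow: Heat compares the new template with the existing stack and applies only the required changes.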

5.2 Auto Scaling

Heat also provides auto scaling that integrates with Ceilometer (the OpenStack telemetry service), so a scaling group can be included as a resource in a template and Ceilometer alarms can trigger the scaling. Templates also define the relationships between resources, which allows Heat to call the OpenStack APIs and create everything in the correct order. Heat manages the whole lifecycle of the application: to change something in an existing stack, you modify the template and Heat handles the rest.

Heat architecture components include:

- heat-api: Implements an OpenStack-native RESTful API and processes API requests by sending them to heat-engine via AMQP.

- heat-api-cfn: Provides an API compatible with AWS CloudFormation and also forwards requests to heat-engine via AMQP.

- heat-engine: Provides the main orchestration functionality.

Like other OpenStack services, Heat uses a back-end database to maintain state information. Both heat-api and heat-api-cfn communicate with heat-engine via AMQP. heat-engine is the layer where the actual integration with the other OpenStack services is implemented, and it also provides the abstractions for high availability and auto scaling.

Auto Scaling Heat Templates

In this auto scaling example, Heat and Ceilometer are used to scale CPU-bound virtual machines. Heat has the concept of a stack, which is simply the environment itself. The Heat stack template describes the process or logic of how a Heat stack is built and managed; this is where you create an auto-scaling group and configure the Ceilometer thresholds. The environment template describes how to create the instances themselves: which image or volume to use, the network configuration, the software to install, and everything else an instance needs to function properly. You could put everything into the Heat stack template, but separating the Heat stack template from the environment is much cleaner, at least in more complex configurations such as auto scaling.

5.3 Deployment of Heat Orchestration

5.3.1 ENVIRONMENT TEMPLATE

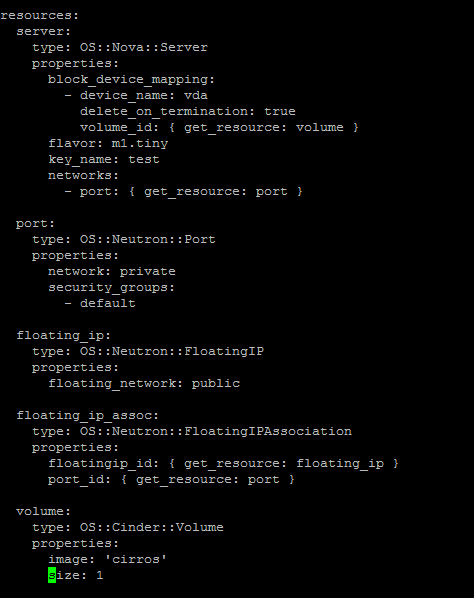

Below we create an environment template for a CirrOS image, as shown in FIG 5.3. The template defines the instance, configures a Cinder volume, adds an IP address from the private network and a floating IP from the public network, attaches the security group and the private SSH key, and generates 100% CPU load through user_data.

HOT (Heat Orchestration Template) is the template format intended to replace the Heat CloudFormation-compatible format (CFN) as the native format supported by Heat. HOT templates are usually written in YAML, although JSON can also be used. HOT templates are used to create stacks in Heat. The structure of a HOT template consists of the heat template version, description, parameter groups, parameters, resources, and outputs.
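The skeleton below is a sketch that puts all six top-level sections of a HOT template in one place. The section keys, the `OS::Nova::Server` type, and the `get_param`/`get_attr` functions come from HOT itself; the parameter names (`image`, `flavor`, `key_name`), their defaults, and the resource name `my_server` are hypothetical examples.

```yaml
heat_template_version: 2013-05-23

description: Skeleton showing every top-level section of a HOT template

parameter_groups:
  - label: Instance settings
    description: Parameters that control the server (hypothetical grouping)
    parameters:
      - image
      - flavor
      - key_name

parameters:
  image:
    type: string
    description: Name or ID of the Glance image
    default: cirros            # assumed image name
  flavor:
    type: string
    description: Nova flavor for the server
    default: m1.tiny           # assumed flavor
  key_name:
    type: string
    description: Existing Nova key pair used for SSH access

resources:
  my_server:
    type: OS::Nova::Server
    properties:
      image: { get_param: image }
      flavor: { get_param: flavor }
      key_name: { get_param: key_name }

outputs:
  server_ip:
    description: First IP address assigned to the server
    value: { get_attr: [my_server, first_address] }
```

Because `key_name` has no default, a value must be supplied when the stack is created, while `image` and `flavor` fall back to their defaults.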

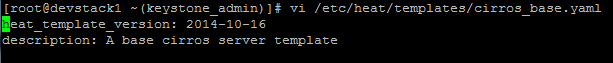

- Heat Template Version: This key indicates that the YAML document is a HOT template of the specified version. Its value is a date, such as 2013-05-23 or a later version date, as shown in FIG 5.1.

FIG 5.1 (Heat Template Version)

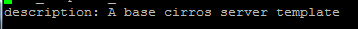

- Description: An optional key that allows giving a description of the template.

FIG 5.2 (Description of Heat Template)

- Parameter_groups: This optional section specifies how the input parameters should be grouped and the order in which they are provided.

- Parameters: This optional section specifies the input parameters that have to be provided when instantiating the template.

- Outputs: This optional section specifies the output parameters available to users once the template has been instantiated.

- Resources: This section defines the actual resources that make up the stack created from the HOT template (for example, Compute instances, networks, and storage volumes). Each resource is defined as a separate block in the resources section. As shown in FIG 5.3, there are five separate resource blocks: server, port, volume, floating IP, and floating IP association.

- Resource ID: Must be unique within the resources section of the template.

- Resource Type: Identifies the type of the resource, which relates to the OpenStack service that provides it, such as the following:

OS::Nova::Server

OS::Neutron::Port

OS::Neutron::FloatingIP

OS::Neutron::FloatingIPAssociation

OS::Cinder::Volume

- Properties: A list of resource-specific properties, whose values can be given directly or through intrinsic functions such as get_param and get_resource.

FIG 5.3 (Heat Template Resources)
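The following is a minimal sketch of what /etc/heat/templates/cirros_base.yaml could contain, following the resource blocks listed above: a server, a port on the private network, a floating IP from the public network with its association, and an attached Cinder volume, plus a security group, an SSH key, and user_data that keeps the CPU busy. The parameter names, defaults (image `cirros`, flavor `m1.tiny`, networks `private` and `public`, key pair `mykey`, security group `default`), and the busy-loop script are assumptions; the template actually used is the one shown in FIG 5.3. The `network` and `floating_network` property names follow newer Heat releases; older releases use `network_id` and `floating_network_id`.

```yaml
heat_template_version: 2013-05-23

description: >
  Sketch of a nested CirrOS server template (cirros_base.yaml).
  All defaults below are assumed example values.

parameters:
  image:       { type: string, default: cirros }    # assumed image name
  flavor:      { type: string, default: m1.tiny }   # assumed flavor
  key_name:    { type: string, default: mykey }     # assumed existing key pair
  private_net: { type: string, default: private }   # assumed private network
  public_net:  { type: string, default: public }    # assumed external network
  metadata:
    type: json
    default: {}   # lets a parent template tag the server for Ceilometer

resources:
  # Port on the private network with the security group attached
  server_port:
    type: OS::Neutron::Port
    properties:
      network: { get_param: private_net }
      security_groups: [ default ]

  server:
    type: OS::Nova::Server
    properties:
      image: { get_param: image }
      flavor: { get_param: flavor }
      key_name: { get_param: key_name }
      metadata: { get_param: metadata }
      networks:
        - port: { get_resource: server_port }
      user_data_format: RAW
      user_data: |
        #!/bin/sh
        # Background busy loop that keeps one vCPU near 100% load
        while true; do :; done &

  # Floating IP from the public network, bound to the server's port
  floating_ip:
    type: OS::Neutron::FloatingIP
    properties:
      floating_network: { get_param: public_net }

  floating_ip_assoc:
    type: OS::Neutron::FloatingIPAssociation
    properties:
      floatingip_id: { get_resource: floating_ip }
      port_id: { get_resource: server_port }

  # 1 GB Cinder volume attached to the server
  volume:
    type: OS::Cinder::Volume
    properties:
      size: 1

  volume_attachment:
    type: OS::Cinder::VolumeAttachment
    properties:
      instance_uuid: { get_resource: server }
      volume_id: { get_resource: volume }
      mountpoint: /dev/vdb
```

The `metadata` parameter is what allows the auto-scaling stack to label each server it creates, so that Ceilometer alarms can be limited to the instances of one stack.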

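Now that we have an environment template, saved as /etc/heat/templates/cirros_base.yaml, we need to create a Heat resource type and link it to that file. This is done with a resource_registry entry in the environment file that is passed to Heat (/root/environment.yaml in the stack-create command used later):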

resource_registry:
  "OS::Nova::Server::Cirros": file:///etc/heat/templates/cirros_base.yaml

5.3.2 Heat Template:

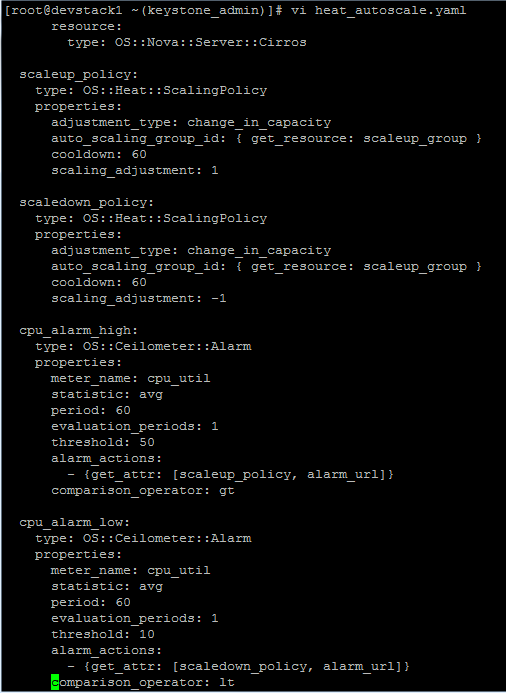

The template in FIG 5.4 defines the behavior of the stack, i.e., when and under what conditions the stack scales up and scales down. The cpu_alarm_high and cpu_alarm_low alarms in the template are used to scale our environment up and down.
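The sketch below shows how such a stack template can be put together. It assumes that the environment file maps `OS::Nova::Server::Cirros` to cirros_base.yaml through resource_registry, that the nested template accepts a `metadata` parameter, and that the Ceilometer-era `OS::Ceilometer::Alarm` resource is available with the `cpu_util` meter. The group sizes (1 to 3) match the behavior described in this chapter; the resource names, thresholds (50% and 15%), periods, and cooldowns are assumed example values, not the ones in FIG 5.4.

```yaml
heat_template_version: 2013-05-23

description: Sketch of a CPU-driven auto-scaling stack (values are assumptions)

resources:
  # Group of CirrOS servers; the type is resolved through the environment file
  asg:
    type: OS::Heat::AutoScalingGroup
    properties:
      min_size: 1
      max_size: 3
      resource:
        type: OS::Nova::Server::Cirros
        properties:
          # Tag each server so the alarms only watch this stack's instances
          metadata: {"metering.stack": {get_param: "OS::stack_id"}}

  scaleup_policy:
    type: OS::Heat::ScalingPolicy
    properties:
      adjustment_type: change_in_capacity
      auto_scaling_group_id: {get_resource: asg}
      cooldown: 60
      scaling_adjustment: 1

  scaledown_policy:
    type: OS::Heat::ScalingPolicy
    properties:
      adjustment_type: change_in_capacity
      auto_scaling_group_id: {get_resource: asg}
      cooldown: 60
      scaling_adjustment: -1

  # Average CPU above 50% over one 60 s period: add one instance
  cpu_alarm_high:
    type: OS::Ceilometer::Alarm
    properties:
      meter_name: cpu_util
      statistic: avg
      period: 60
      evaluation_periods: 1
      threshold: 50
      comparison_operator: gt
      alarm_actions:
        - {get_attr: [scaleup_policy, alarm_url]}
      matching_metadata: {"metadata.user_metadata.stack": {get_param: "OS::stack_id"}}

  # Average CPU below 15% over one 60 s period: remove one instance
  cpu_alarm_low:
    type: OS::Ceilometer::Alarm
    properties:
      meter_name: cpu_util
      statistic: avg
      period: 60
      evaluation_periods: 1
      threshold: 15
      comparison_operator: lt
      alarm_actions:
        - {get_attr: [scaledown_policy, alarm_url]}
      matching_metadata: {"metadata.user_metadata.stack": {get_param: "OS::stack_id"}}
```

Each alarm calls the pre-signed `alarm_url` of its policy, and the policy changes the group size by one, never going below `min_size` or above `max_size`. A 60-second alarm period is only meaningful if Ceilometer samples CPU at least that often, which is why the collection interval is shortened in the next step.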

FIG 5.4 (Behavior of the Stack)

Update Ceilometer Collection Interval

By default, Ceilometer collects CPU data from instances every 10 minutes (600 seconds). For this example, we want to change that to 60 seconds. Change the interval to 60 in the pipeline.yaml file and restart the OpenStack (Ceilometer) services.
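An excerpt of what the change could look like in /etc/ceilometer/pipeline.yaml (the usual location) is sketched below. The layout assumes the Icehouse/Juno-era source/sink format, in which `cpu_source` polls the cumulative `cpu` meter and `cpu_sink` derives the `cpu_util` percentage used by the alarms; the default file differs between releases, so only the `interval` values should be edited in the real file.

```yaml
---
sources:
    - name: meter_source
      interval: 60          # changed from the default 600 (10 minutes)
      meters:
          - "*"
      sinks:
          - meter_sink
    - name: cpu_source
      interval: 60          # changed from the default 600 (10 minutes)
      meters:
          - "cpu"
      sinks:
          - cpu_sink
sinks:
    - name: meter_sink
      transformers:
      publishers:
          - notifier://
    - name: cpu_sink
      transformers:
          # Converts cumulative CPU time (ns) into a utilization percentage
          - name: "rate_of_change"
            parameters:
                target:
                    name: "cpu_util"
                    unit: "%"
                    type: "gauge"
                    scale: "100.0 / (10**9 * (resource_metadata.cpu_number or 1))"
      publishers:
          - notifier://
```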


5.3.3 RUNNING THE STACK:

Run the following command as the admin user (with the keystone_admin credentials loaded) to create the stack:

heat stack-create heat_autoscale -f /root/heat_autoscale.yaml -e /root/environment.yaml

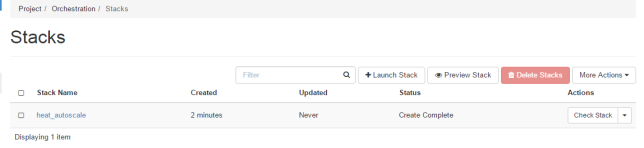

Check the status of the stack in the Horizon dashboard, as shown in FIG 5.5:

FIG 5.5 (Heat Stack Status)

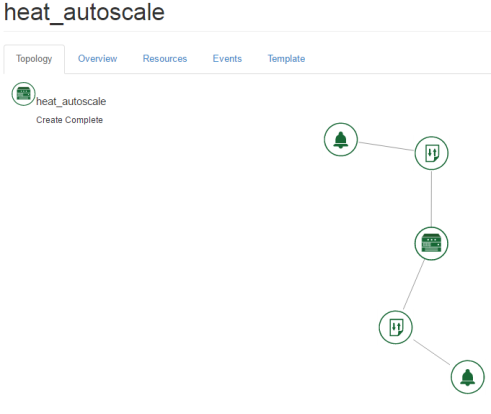

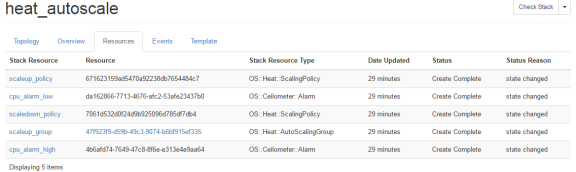

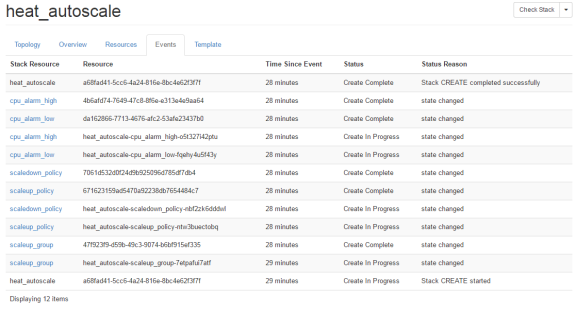

FIG 5.6 and FIG 5.7 show the Heat stack topology and resources, and the stack events are shown in FIG 5.8.

FIG 5.6 (Heat Stack Topology)

FIG 5.7 (Heat Stack Resources)

FIG 5.8 (Heat Stack Events)

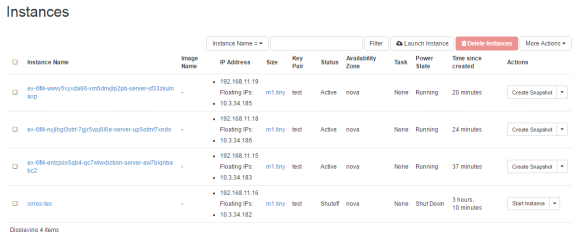

Heat creates one instance as per the defined policy, as shown in FIG 5.9:

FIG 5.9 (Heat Stack Instance)

Automatic Scale UP:

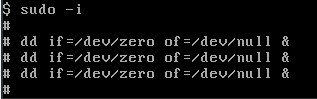

Now we increase the CPU utilization on one of the instances and verify whether Heat autoscales the environment. To do that, run the following commands on the instance that Heat created from the stack, as shown in FIG 5.10.

FIG 5.10 (Heat Autoscaling)

Heat created two more instances based on the policy defined in the orchestration template. It stopped at two additional instances because the policy allows a maximum of three instances, as shown in FIG 5.11.

FIG 5.11 (Two Instances Created Based on the Policy)

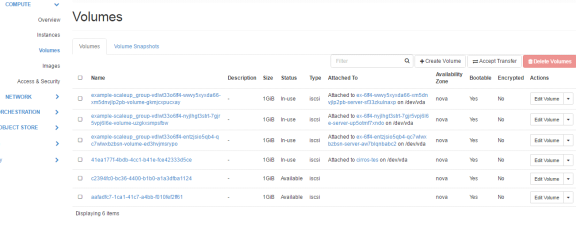

The list of volumes that Heat created based on the defined policy threshold is shown in FIG 5.12:

FIG 5.12 (Volumes That Heat Created Based on the Defined Policy)

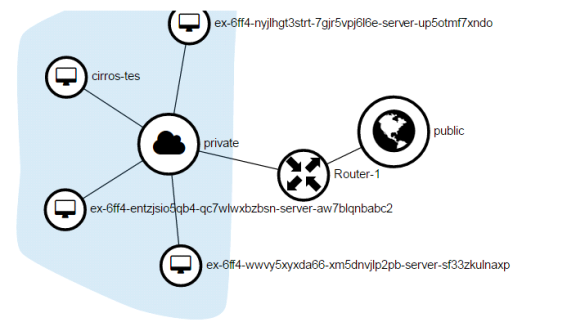

The new network topology after adding the instances to the private network is shown in FIG 5.13.

FIG 5.13 (Heat Topology After Adding Two Instances)

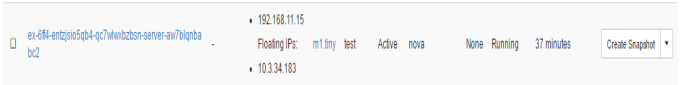

5.3.4 SCALE DOWN:

Heat also scales down automatically. Once the CPU utilization on the instances drops and the load returns to normal, the cpu_alarm_low alarm triggers and Heat removes the extra instances that were created to handle the load, until the group is back to one instance. In this way, resources are used efficiently. In our scenario, instance “aw7blqnbabc2” is the original instance, and the remaining instances were created to handle the load.